This website is dedicated to the New Jersey Gay Men's Chorus

A $20 Ethernet cable and a $20 USB-Ethernet adapter together serve as the foundation of a typical low-latency audio setup. For many musicians on typical home networks, treating a particular software application as the key to low-latency audio while neglecting to set up an Ethernet connection is about as senseless as expecting, back in the 1990s, Microsoft Encarta to deliver a multimedia encyclopedia without first buying a CD-ROM drive, sound card, and speakers.

To skip past reading a wall of text and illustrations, go to the posters and video at the end of the page.

When we speak or sing together over the internet, audio is represented and conveyed in multiple formats.

For example, a musician can use an acoustic instrument, like a voice, to send out traveling waves of density and pressure variation in air. A diaphragm in a microphone responds by vibrating, generating fluctuations in electrical voltage in a cable that are sensed by a USB Audio Interface. At regular intervals in time, the instantaneous voltage signal amplitude is rounded to the nearest level that can be represented using, say, 16 bits. Each bit is either a zero or a one, and each rounded instantaneous amplitude is called a sample. At regular intervals, the sender’s computer fetches from the USB Audio Interface a group of samples for use by an audio application. The number of samples fetched as a group is called the size of the sample buffer.
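The sampling steps described above can be sketched in a few lines. This is a minimal illustration, not the behavior of any particular audio interface: the 48 kHz sample rate and the function names `quantize_16bit` and `buffer_duration_ms` are assumptions introduced here for the example.

```python
def quantize_16bit(amplitude: float) -> int:
    """Round an amplitude in [-1.0, 1.0] to the nearest signed 16-bit level."""
    clipped = max(-1.0, min(1.0, amplitude))  # keep the value in range
    return int(round(clipped * 32767))        # 32767 is the largest 16-bit level


def buffer_duration_ms(buffer_size_samples: int, sample_rate_hz: int = 48_000) -> float:
    """Time span covered by one sample buffer (48 kHz is an assumed rate)."""
    return 1000.0 * buffer_size_samples / sample_rate_hz


if __name__ == "__main__":
    print("full-scale sample:", quantize_16bit(1.0))
    for size in (64, 128, 256):
        print(f"{size} samples cover {buffer_duration_ms(size):.2f} ms")
```

Under these assumptions, a smaller sample buffer covers a shorter span of time, so each group of samples waits less before the audio application can use it.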

The audio application packages some samples into a packet that is sent through the sender’s home network to their router and then through the sender’s internet service provider to very high-bandwidth backbone links that physically rely on optical fiber. Eventually, the packets hopefully reach the receiver’s internet service provider, the receiver’s router, and finally, by way of the receiver’s home network, the receiver’s computer.

At the receiver’s computer, the audio application handles the received bits of audio data and sends them to the receiver’s USB Audio Interface, which converts them to a fluctuating voltage signal. That signal drives corresponding fluctuations in electrical current that cause the diaphragm in a headphone to vibrate, sending sound waves to the receiver’s ear.

Because each participant in a call is at once both a sender and a receiver (in other words, both a music maker and a music listener), a second copy of the signal chain illustrated here runs in reverse at the same time.

Nothing we’ve discussed in this illustration is unique to a low-latency audio connection. All of the features of this illustration can be considered when setting up a high-latency call, as is typically done, for example, on Zoom.

The sender’s computer transmits outbound packets. The packets have to travel through various networks. The packets are then sorted at the receiver’s computer in a portion of computer memory called a jitter buffer, represented below as a conveyor belt. The packets exiting the conveyor belt are opened, and the contained bits are sent onward for playback.
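The sorting role of the conveyor belt can be sketched as a small priority queue. This is a toy illustration only: it assumes each packet carries a sequence number, and the `JitterBuffer` class and its method names are hypothetical, not taken from any of the applications discussed on this site.

```python
import heapq


class JitterBuffer:
    """Toy jitter buffer that releases packets in sequence-number order."""

    def __init__(self):
        self._heap = []  # min-heap of (sequence_number, payload) pairs

    def push(self, sequence_number: int, payload: bytes) -> None:
        """Store a packet as it arrives, in whatever order the network delivers it."""
        heapq.heappush(self._heap, (sequence_number, payload))

    def pop_next(self):
        """Return the buffered packet with the lowest sequence number, or None if empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)


if __name__ == "__main__":
    belt = JitterBuffer()
    # Packets arrive out of order...
    for seq in (2, 0, 1):
        belt.push(seq, f"audio-{seq}".encode())
    # ...and leave the belt in playback order.
    while (packet := belt.pop_next()) is not None:
        print(packet)
```

A real jitter buffer also decides when to release each packet and what to do about packets that never arrive; this sketch shows only the reordering.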

Some packets cross the distance more quickly, and some more slowly. We make two points. First, there is such a thing as a fastest packet, or a group of equally fast fastest packets. Second, there are packets that take longer than the fastest packet to complete their journeys.

In vacuum, light travels at Mach 870,000, which is a fancy way of saying that in vacuum, light travels at a speed that is 870,000 times the speed of sound.

A teaching cartoon that can be used in high school physics courses cites absorptions and emissions of photons, which are particles of light, to explain that light travels more slowly through materials than through a vacuum. A vacuum provides a clear, unobstructed path for the movement of a photon, but a photon traveling in a condensed phase material, like the glass that optical fiber data links are made from, undergoes a series of so-called "stopovers" as the photon is repeatedly absorbed and emitted by the glass. So, light traveling through optical fiber travels more slowly than in vacuum, at a speed of about Mach 580,000.
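The two Mach figures can be checked with a short calculation. The sketch below assumes a speed of sound of 343 m/s (air at about 20 °C) and a refractive index of 1.5 for the glass; both values are assumptions chosen for illustration, and real optical fiber has a slightly lower index.

```python
SPEED_OF_LIGHT_VACUUM_M_S = 299_792_458  # exact, by definition of the meter
SPEED_OF_SOUND_M_S = 343.0               # assumed: air at roughly 20 degrees C
REFRACTIVE_INDEX_GLASS = 1.5             # assumed: typical fiber is close to this


def mach_number(speed_m_s: float) -> float:
    """Express a speed as a multiple of the assumed speed of sound."""
    return speed_m_s / SPEED_OF_SOUND_M_S


def speed_in_medium_m_s(refractive_index: float) -> float:
    """Speed of light in a medium with the given refractive index."""
    return SPEED_OF_LIGHT_VACUUM_M_S / refractive_index


if __name__ == "__main__":
    print(f"vacuum: Mach {mach_number(SPEED_OF_LIGHT_VACUUM_M_S):,.0f}")
    print(f"glass:  Mach {mach_number(speed_in_medium_m_s(REFRACTIVE_INDEX_GLASS)):,.0f}")
```

With these assumed inputs, the results land close to the rounded figures quoted above.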

When the 25-ms one-way delay often mentioned in discussions of low-latency audio is achieved, roughly half of that delay is accrued just from the non-zero minimum journey duration that cannot be escaped because of the finite speed of light.

The scaling of some of the processes in the figure below is not meant to be represented very accurately. The main point is to show about 10 ms of delay coming from signal propagation over a finite distance at the finite speed of light and jitter of comparable magnitude.

Pairs of cities that are connected by one-way transit durations of up to about 10 ms can be identified by exploring an interactive map that visualizes data from WonderProxy.

The finite speed of light means that data must take a non-zero amount of time to travel a finite distance. Minimizing the minimum journey duration imposed by the speed of light can be accomplished by minimizing the distance between participants in a low-latency audio call (in practice, by keeping participants within several hundred miles of each other).
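As a rough sketch of how distance translates into minimum delay, the function below computes the one-way travel time of light through a length of glass. It assumes a refractive index of 1.5 and a perfectly direct fiber path; the function name `one_way_fiber_delay_ms` is introduced here for illustration.

```python
SPEED_OF_LIGHT_VACUUM_M_S = 299_792_458
REFRACTIVE_INDEX_GLASS = 1.5  # assumed value for the fiber


def one_way_fiber_delay_ms(path_length_km: float,
                           refractive_index: float = REFRACTIVE_INDEX_GLASS) -> float:
    """Minimum one-way travel time of light along a fiber path of the given length."""
    speed_m_s = SPEED_OF_LIGHT_VACUUM_M_S / refractive_index
    return 1000.0 * (path_length_km * 1000.0) / speed_m_s


if __name__ == "__main__":
    for km in (100, 500, 1000, 2000):
        print(f"{km:>5} km of fiber: {one_way_fiber_delay_ms(km):.1f} ms one way")
```

Real network routes are longer than the straight-line distance between two cities and pass through routing equipment along the way, so the delay between two sites is larger than this idealized figure suggests.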

Even when two participants in a call are geographically close to each other, some packets of data can take especially long to arrive.

When received packets of audio data are loaded onto a longer conveyor belt, which is to say, a bigger jitter buffer, before playback, the longer durations of time that packets typically take to ride the conveyor belt offer grace so that particularly late-arriving packets still have a chance to sneak into their places to be played back at their correct times. In the figure below, scenario one shows a longer conveyor belt. In this scenario, the green packet just now arriving at the receiver's computer still has a chance to sneak into its position behind the blue packet so as to be played after the blue packet is played. Using a longer conveyor belt promotes signal integrity.

When received packets of audio data are loaded onto a shorter conveyor belt, which is to say, a smaller jitter buffer, packets typically spend less time riding the conveyor belt before playback. In the figure above, the yellow packet arrived at the same time in both scenarios (one with a longer conveyor belt and the other with a shorter conveyor belt), but the yellow packet riding along the shorter conveyor belt is about to be played whereas the yellow packet riding along the longer conveyor belt still has to wait a while before finally reaching the output end of the longer conveyor belt and finally being played. A shorter conveyor belt offers lower latency.

In the presence of packet-to-packet variation in journey duration, signal immediacy and integrity come at each other’s expense. The size of the jitter buffer is adjusted to choose a compromise mixture of lagginess and crackliness.
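The compromise between lagginess and crackliness can be illustrated with a toy simulation. The sketch assumes that each packet's extra delay beyond the fastest packet follows an exponential distribution with a 3 ms mean; that distribution is an assumption made purely for illustration, not a measurement of any real network.

```python
import random


def simulate_extra_delays_ms(n_packets: int, mean_jitter_ms: float, seed: int = 0):
    """Draw each packet's extra delay beyond the fastest packet (assumed exponential)."""
    rng = random.Random(seed)
    return [rng.expovariate(1.0 / mean_jitter_ms) for _ in range(n_packets)]


def late_fraction(extra_delays_ms, jitter_buffer_ms: float) -> float:
    """Fraction of packets arriving too late to be played at their correct times."""
    late = sum(1 for delay in extra_delays_ms if delay > jitter_buffer_ms)
    return late / len(extra_delays_ms)


if __name__ == "__main__":
    delays = simulate_extra_delays_ms(n_packets=10_000, mean_jitter_ms=3.0)
    for buffer_ms in (2, 5, 10, 20, 30):
        share = late_fraction(delays, buffer_ms)
        print(f"jitter buffer {buffer_ms:>2} ms: {share:.2%} of packets too late")
```

Every added millisecond of jitter buffer is a millisecond of extra latency, and in exchange the share of packets that miss their playback slot shrinks.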

What can we do to reduce packet-to-packet variation in journey duration, and, so, the size of the jitter buffer we are forced to use to achieve a desired amount of signal integrity?

Jitter is contributed at multiple steps along the signal chain. One factor that can sometimes be dominant and, fortunately, also controllable, is variable turn-taking delay in the transmission of data between a computer and a router on a home network.

When multiple devices in a household need to transmit data over a portion of radio spectrum, the devices try to take turns to avoid making too many simultaneous transmissions. This is loosely reminiscent of the way in which multiple people in a conversation need to be careful to avoid all talking over each other at once lest they create a completely unintelligible cacophony.

Wi-Fi standards implement strategies for managing how multiple household devices share radio spectrum. We can loosely visualize such strategies as strategies for managing traffic at a small road junction that doesn’t have enough room to be simultaneously crossed by all the cars that might simultaneously reach the entrances feeding into the junction.

A computer running a low-latency audio application needs to frequently transmit small portions of data. On a shared home wireless network, there could indeed be times when the computer gets lucky and encounters shared radio spectrum that just happens to be clear of competing traffic. So, sometimes, the computer gets to transmit data immediately.

Unfortunately, with older Wi-Fi standards like Wi-Fi 5 (also known as 802.11ac), the limited shared radio spectrum can easily be fully occupied by other data transmissions in progress, which can force the computer to wait a substantial duration for other traffic to clear. Please note that the cartoons on this page are not drawn to scale. The entirety of the shared radio spectrum managed by a household router might be more accurately represented as being big enough for a small number of cars, rather than just one, but the main point is the same: older Wi-Fi standards make it easy for shared spectrum to become congested, so a computer that needs to transmit data commonly has to wait. With Wi-Fi 5 and older, some packets might be transmitted with little delay in the home network while others are transmitted with very large delay, so these older Wi-Fi standards can introduce substantial jitter to a data stream.

A user comfortable tinkering with technology might want to experiment with assigning the computer to a 5 GHz wireless band and the other household devices to a 2.4 GHz wireless band to try to eliminate wireless traffic conflicts involving the computer. It is, in fact, possible to run a low-latency audio call over an older Wi-Fi network. However, this strategy is not assured to work, and trying to make low-latency audio work over an older Wi-Fi network can consume mental energy, so a consultant being paid to help clients set up a reliable low-latency audio configuration should, as a professional matter, treat Wi-Fi 5 and older Wi-Fi standards as contraindicated.

A home network made up of devices capable of using Wi-Fi 6 (also known as 802.11ax) or newer can be configured to manage competing traffic more effectively. With correct settings, the shared radio spectrum is divided into a relatively large number of relatively small portions. By allocating small portions of radio spectrum to individual transmissions from different devices, limited shared radio spectrum can be occupied simultaneously by multiple device transmissions more easily than with Wi-Fi 5 and older. Speaking loosely and metaphorically, and with no intention of creating a representation drawn to scale, a small patch of pavement might provide enough room for individuals from multiple groups to cross simultaneously as pedestrians even when that same small patch of pavement doesn’t provide enough room for more than one car of passengers to cross at a time.

With Wi-Fi 6, as compared with Wi-Fi 5, a computer is more likely to find a portion of radio spectrum free for it to use to transmit data even while other competing data transmission is ongoing. With Wi-Fi 6 and newer Wi-Fi standards, the computer is more likely to be able to transmit the vast majority of packets within a parade of packets with about the same delay, and, so, with much less jitter. It is possible to run low-latency audio over Wi-Fi when a properly configured Wi-Fi 6 or newer configuration is used.

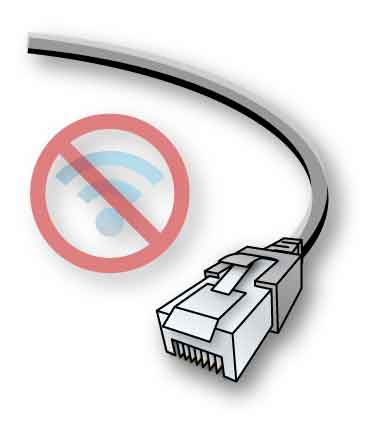

An alternative solution completely forgoes using Wi-Fi to transmit low-latency audio data in the home network. Connect the computer running the low-latency audio application directly to the router using an Ethernet cable. A modern full-duplex Ethernet connection does not contribute any turn-taking delay to communication between the computer and the router.

Even though there are ways to make low-latency audio work over Wi-Fi, a paid consultant should always advise a client to set up low-latency audio technology using Ethernet. Giving in to a client’s wishes to use Wi-Fi can leave an equipment configuration vulnerable to interference and other avoidable issues in the future. Exposing a client to such risks might be considered unprofessional when a consultant is paid to help a client work toward the best possible long-term experience.

The finite speed of light means that light takes a non-zero duration to travel across a finite distance. We can control this contribution to signal delay by limiting the geographic distance between participating sites in a low-latency audio call. Fastest-packet one-way transit delays of several milliseconds, up to about 10 ms, are realistically achievable.

Packet-to-packet variation in journey duration requires that we use a jitter buffer of finite size (or, so to speak, a “conveyor belt” of finite length) if we want to control the prevalence of crackly dropouts. When all computers in a low-latency call are connected to their routers using Ethernet, the jitter in each received audio stream may require a jitter buffer of only several milliseconds, or perhaps about 10 ms, rather than the roughly 30 ms that might be needed when a session participant is using a poorly equipped or poorly configured Wi-Fi home network.

Taking the considerations we have discussed, and others we haven’t, into account often allows musicians to achieve a one-way mouth-to-ear delay of about 25 milliseconds.
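One way to picture how these contributions add up is a simple latency budget. The propagation and jitter-buffer entries use the ballpark figures discussed above; the 5 ms "remainder" entry is an assumption introduced here only so that the total reaches 25 ms, and none of these numbers is a measurement.

```python
# All values in milliseconds. These are ballpark assumptions, not measurements.
LATENCY_BUDGET_MS = {
    "propagation (fastest packet)": 10.0,
    "jitter buffer": 10.0,
    "everything else (assumed remainder)": 5.0,
}


def total_latency_ms(budget: dict) -> float:
    """Sum the one-way mouth-to-ear contributions in the budget."""
    return sum(budget.values())


def with_jitter_buffer(budget: dict, jitter_buffer_ms: float) -> dict:
    """Return a copy of the budget with a different jitter buffer size."""
    updated = dict(budget)
    updated["jitter buffer"] = jitter_buffer_ms
    return updated


if __name__ == "__main__":
    print("Ethernet-style budget:", total_latency_ms(LATENCY_BUDGET_MS), "ms")
    wifi_budget = with_jitter_buffer(LATENCY_BUDGET_MS, 30.0)
    print("With a 30 ms jitter buffer:", total_latency_ms(wifi_budget), "ms")
```

Swapping the roughly 10 ms jitter buffer for a roughly 30 ms one shows how a poorly behaved home network alone can push the total well past the 25 ms target.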

Is 25 ms of mouth-to-ear delay good enough for making music over the internet?

A musician can find that analog zero-latency monitoring is the only way they can tolerate listening to their self-signal (or “sidetone”).

A round-trip delay of, for example, 10 ms can be incurred when audio passes through a computer. Vocal talent might find that a live monitor signal delayed by even just these 10 ms can be annoying, except perhaps if the delayed monitoring signal is used only for playing back digital reverb and similar ambience effects that are mixed on top of a zero-latency primary sidetone.

When musicians use low-latency audio technology to connect to each other from nearby states, they can sometimes achieve a one-way latency of 25 ms.

If musicians who use digital audio workstations know that even just 10 ms of latency can be annoying, how is there any chance of making music naturally online with an even greater 25 ms of latency?

Not all milliseconds of delay feel the same. 10 ms of delay from YOUR mouth to YOUR own ears can feel unpleasant even while 25 ms of delay from YOUR mouth to SOMEONE ELSE’s ears can feel OK.

25 ms of delay from your mouth to someone else’s ears corresponds to roughly 25 to 30 feet of physical separation in a shared room (sound travels a little over one foot per millisecond in air), which is admittedly large as far as studio work is concerned, but unremarkable as far as choral work is concerned.
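The comparison between delay and room distance can be checked with a quick calculation. The sketch assumes a speed of sound of 343 m/s (air at about 20 °C); the function name `delay_to_distance_feet` is introduced here for illustration.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: air at roughly 20 degrees C
METERS_PER_FOOT = 0.3048    # exact, by definition of the international foot


def delay_to_distance_feet(delay_ms: float) -> float:
    """Distance sound travels through air during the given delay."""
    meters = SPEED_OF_SOUND_M_S * (delay_ms / 1000.0)
    return meters / METERS_PER_FOOT


if __name__ == "__main__":
    for delay_ms in (10, 25):
        print(f"{delay_ms} ms of delay is about {delay_to_distance_feet(delay_ms):.0f} feet of air")
```

Under this assumption, 25 ms works out to roughly 28 feet, in the same ballpark as the spacing between singers at opposite ends of a choir.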